A recent report by the McKinsey Global Institute 1) suggests that investment in artificial intelligence (AI) is growing fast. McKinsey estimates that digital leaders such as Google spent “USD 20 billion to USD 30 billion on AI in 2016, with 90 % of this allocated to R&D and deployment, and 10 % to AI acquisitions”. According to the International Data Corporation (IDC) 2), by 2019, 40 % of digital transformation initiatives will deploy some form of AI and, by 2021, 75 % of enterprise applications will use AI, with expenditure growing to an estimated USD 52.2 billion.

From perception to reality

But what exactly is AI? According to Wael William Diab, Chair of the new technical committee ISO/IEC JTC 1, Information technology, subcommittee SC 42, Artificial intelligence, the field of AI includes a collection of technologies. The newly formed committee has started with some foundational standards that include AI concepts and terminology (ISO/IEC 22989). Diab stresses that the interest in AI is quite broad, bringing together a very wide range of diverse stakeholders such as data scientists, digital practitioners, and regulatory bodies. He also points out that there’s something of a gap between what AI actually is today and what it is often perceived to be. “People tend to think of AI as autonomous robots or a computer capable of beating a chess master. To me, AI is more of a collection of technologies that are enabling, effectively, a form of intelligence in machines.”

He also explains that AI is often seen as a group of fully autonomous systems – robots that move – but, in reality, much of AI goes into semi-autonomous systems. In many AI systems, a good deal of data will have been prepared before being fed into an engine that has some form of machine learning, which will then, in turn, produce a series of insights. These technologies can include, but are by no means limited to, machine learning, big data and analytics.

Umbrella of technologies

Currently a Senior Director of Huawei Technologies, Diab is Chair of the ISO/IEC subcommittee for good reason. Armed with several degrees in electrical engineering, economics and business administration from both Stanford and Wharton, he has focused his professional life closely on business and technology strategy. He has also worked for multinational conglomerates Cisco and Broadcom and has been a consultant specializing in Internet of Things (IoT) technologies, most recently serving as Secretary of the Steering Committee of the Industrial Internet Consortium. He has filed over 850 patents, of which close to 400 have been issued, with the rest under examination. That’s more patents than those filed by Tesla – and not one of his applications has been rejected.

Diab’s true specialism lies in the breadth of his expertise – his range stretches from the early incubation of ideas to strategically driving the industry forward. It’s also why he’s so keen on standardization, which he sees as the perfect vehicle for the healthy expansion of the industry as a whole. He argues that we need standards for AI for several reasons. First, there’s the degree of sophistication of IT in today’s society. After all, an average smartphone now has more computing power than all of the Apollo missions combined. Second, IT is moving deeper and deeper into every sector. After a slow start in the 1970s and 80s, IT systems are no longer needed merely for greater efficiency; they are now needed to reveal operational and strategic insights. Finally, there is the sheer pervasiveness of IT in our lives. Every sector relies on it, from finance to manufacturing to healthcare to transportation to robotics and so on.

Part of the solution

This is where International Standards come into play. Subcommittee SC 42, which is under joint technical committee JTC 1 of ISO and the International Electrotechnical Commission (IEC), is the only body looking at the entire AI ecosystem. Diab is clear that he and his committee are starting with the recognition that many aspects of AI technology standardization need to be considered to achieve wide adoption. “We know that users care deeply and want to understand how AI decisions are made, thus the inclusion of aspects like system transparency are key,” he says, “so comprehensive standardization is a necessary part of the technology adoption.”

The AI ecosystem has been divided into a number of key areas spanning technical, societal and ethical considerations. These include the following broad categories.

Foundational standards

With so many varying stakeholders, a basic starting point has been the committee’s work on “foundational standards”. This looks at aspects of AI that necessitate a common vocabulary, as well as agreed taxonomies and definitions. Eventually, these standards will mean that a practitioner can talk the same language as a regulator and both can talk the same language as a technical expert.

Computational methods and techniques

At the heart of AI is an assessment of the computational approaches and characteristics of artificial intelligence systems. This involves a study of different technologies (e.g. ML algorithms, reasoning, etc.) used by the AI systems, including their properties and characteristics as well as the study of existing specialized AI systems to understand and identify their underlying computational approaches, architectures, and characteristics. The study group will report on what is happening in the field and then suggest areas in which standardization is required.

Trustworthiness

One of the most challenging topics for the industry is that of “trustworthiness”, the third area of focus. This goes straight to the heart of many of the concerns around AI. The study group is considering everything from security and privacy to robustness of the system, to transparency and bias. Already with AI, there are systems that are either making decisions or informing individuals about decisions that need to be made, so a recognized and agreed form of transparency is vital to ascertain that there is no undesirable bias. It is highly likely that this study group will set out a whole series of recommendations for standardization projects. Such work will provide a necessary tool and proactively address concerns in this area. “By being proactive in recognizing that these issues exist and standards can help mitigate them, that’s a huge departure from how transformative technologies were done in the past, which were more of an afterthought,” Diab says firmly.

Use cases and applications

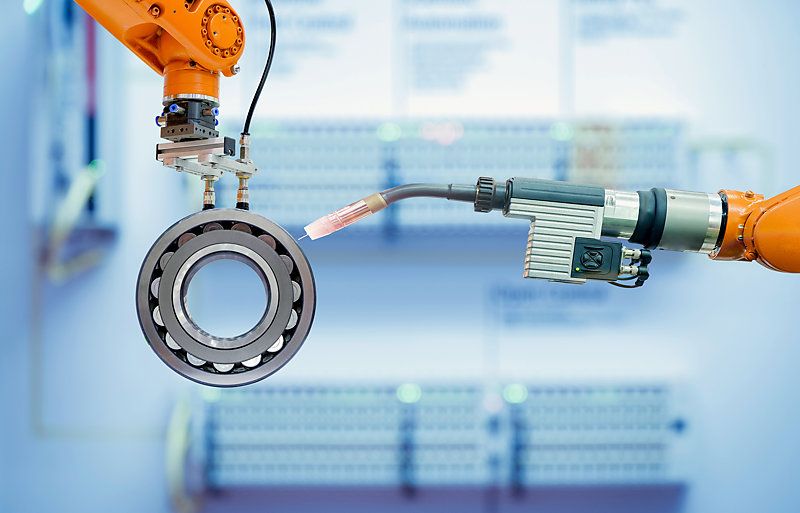

The fourth area of focus is to identify “application domains”, the contexts in which AI is being used, and collect “representative use cases”. Autonomous driving and transportation, for instance, is one such category. Another example is the use of AI in the manufacturing industry to increase efficiency. The group’s reports will lead to the commencement of a series of projects that could include everything from a comprehensive repository of use cases, to best practices for certain application domains.

Societal concerns

Another area of focus is what Diab terms “societal concerns”. Broad technologies like IoT and AI have the ability to influence how we exist for generations to come, so their adoption creates impacts that go much further than the technology itself. Some of these are economic considerations, such as AI’s impact on the labour force (which naturally go beyond the remit of the committee). But others certainly do fall into its purview: issues such as algorithmic bias, eavesdropping, and safety directives in industrial AI are all central to what the committee must look at. How, for instance, should an algorithm be safely trained – and then, when necessary, re-trained – to function properly? How do we prevent an AI system from correlating the “wrong” information, or basing decisions on inappropriately biased factors such as age, gender or ethnicity? How do we make sure that a robot working in tandem with a human operator doesn’t endanger its human colleague?

SC 42 is looking at these aspects of societal concern and ethical considerations throughout its work, and collaborating with the broader committees underneath its parent organizations, ISO and IEC, on items that may not be under the “IT purview” but are impacted by it.

Big data

A few years ago, JTC 1 established a programme of work on “big data” through its working group WG 9. Currently, the big data programme has two foundational projects, one on overview and vocabulary and one on a big data reference architecture (BDRA), both of which have received tremendous interest from the industry. In terms of data science, expert participation, use cases and applications, anticipated future work on analytics, and the role of systems integration, the big data work programme has much in common with the initial work programme for SC 42. From an industry practice point of view, it’s hard to imagine applications where one technology is present without the other. For this and many other reasons, the big data programme has been transferred to SC 42. The committee will focus on how to structure the work at its next meeting. It is also anticipated that new work products for big data will be developed.

Exponential growth

The field of AI is evolving very quickly and expanding so much that the application of the standards being developed by SC 42 will continue to grow along with the work programme of the committee. Diab foresees many more standards taking shape, especially in areas that have broad appeal, applicability and market adoption.

And it’s also because of these standards that Diab is certain AI adoption will not only be successful, but will also prove to be one of those major technology inflection points that change how we live, work and play.

1) McKinsey Global Institute, Artificial Intelligence: The Next Digital Frontier?

2) IDC, US Government Cognitive and Artificial Intelligence Forecast 2018-2021